From Map Packs to Machine Perception

Local SEO in an AI-first world of Meta Ray-Bans, TikTok Maps, and spatial discovery models.

I’m not the only voice in local SEO, nor should I be.

There’s a deep bench of smart, more experienced professionals shaping this space—each bringing different strengths to the table. In the U.S., Joy Hawkins (@JoyanneHawkins), Greg Gifford (@GregGifford), Ben Fisher (@TheSocialDude), Mike Blumenthal (@mblumenthal), and Andrew Shotland (@localseoguide) have all played pivotal roles in shaping how we think about local strategy, visibility, and trust. In Canada, Darren Shaw (LinkedIn), Nyagoslav Zhekov (@Nyagoslav), Dana DiTomaso (LinkedIn), and Colan Nielsen (@ColanNielsen) have helped push the conversation forward with deep insights into data, multi-location frameworks, and evolving platform behavior. Outside the US and Canada, Claire Carlile (@clairecarlile) has my attention and continues to deliver practical, technical guidance that resonates far beyond her home market.

This piece reflects my own experience and perspective—but these are the kinds of voices I listen to, and I’d welcome their takes on where we’re heading!

Because what’s next is very different.

We’re Not Just Optimizing for Maps Anymore

Local SEO has always adapted to changes in interface and behavior. From early NAP listings to review strategies, citations, structured data, and optimized Google Business Profiles—it’s been about being visible where customers look.

But now, the purpose behind these practices is shifting.

Those foundational signals—your structured data, reviews, photos, and links—still matter. But instead of simply feeding search engines to earn rankings, they now serve as retrieval anchors for AI systems that interpret and respond to human intent across multiple modes: vision, location, audio, behavior.

What used to help you rank now helps you get retrieved by AI.

The Interface Is Changing—Even If the First Attempts Flopped

The next shift in local discovery isn’t happening in search boxes. It’s happening through ambient agents that interpret your environment and suggest actions—without requiring a typed query.

And while it’s tempting to dismiss the early flops, don’t.

Rabbit R1 and the Humane AI Pin launched in 2024 with massive hype. Both devices captured serious interest from consumers who clearly wanted a phone-free way to engage with AI. But both devices fell apart on execution. Reviews were brutal. Daily use plummeted. Sales stalled.

They weren’t failures of concept. They were failures of delivery.

What those launches proved is this: people are open to changing how they discover the world around them. And for local businesses, that opens the door to a very different kind of visibility.

Meta’s Ray-Bans Are Already in the Market—and Evolving

While Apple made headlines at WWDC 2025 with software moves like “Liquid Glass” UI elements—clearly laying the foundation for a future spatial interface—it didn’t ship any glasses.

But Meta did.

The Meta Ray-Ban smart glasses are already in consumers’ hands. They handle photos, video, voice, and real-world interaction cues. And recent price drops point to what’s likely an incoming second generation—with more agentic capabilities baked in.

That matters. Because when those glasses—or any comparable wearable—start suggesting places to go or things to buy, it won’t be based on keywords or categories.

It’ll be based on where you are, what you’re doing, what you’ve shown interest in, and what the AI agent can confirm is relevant in the moment.

Imagine this: “That’s Northern Peak Roasters. They just got their new Ethiopian natural-process beans in—want to reserve a bag?” Or: “Loop Sneaker Club has your size in those retro trainers—should I hold them while you’re nearby?”

That’s not search. That’s ambient, real-time recommendation.

Full disclosure – I have Meta Ray-Bans. I love them. Bought them and immediately had RX lenses added so I can wear them frequently. They are…revolutionary. If you’ll indulge a little pun here, they very much help you see the future clearly and they bring a level of utility to your day that’s otherwise lacking. Not selling them, but I am saying that 2 years later, I still use them frequently and will spend my own money again on a new version when they drop.

Meta’s V-JEPA: The World Model Behind AI’s Physical Intelligence

While smart glasses are reshaping the interface, the real revolution may be happening under the hood — in the models powering those agents. In June 2025, Meta introduced V-JEPA 2, a video-trained world model designed to help AI understand, predict, and reason about physical environments in the way humans do.

Why does this matter for local businesses? Because agents powered by a model like V-JEPA will no longer rely solely on static business listings or photos. They’ll use spatial awareness (understanding where objects are, how people move, and what’s happening in the environment) to make real-time recommendations. That means your storefront, signage, foot traffic, and even the flow of people outside your space could influence whether or not you’re recommended. Other people’s photos and videos, notable street art nearby, even events in your neighborhood: all of these are on-ramps that connect where people physically are to your business.

This shift turns physical visibility into digital visibility — and it's coming fast.

The Shift: From Ranking to Retrievability

In this new discovery layer, the goal is no longer to rank at the top of a Local Pack.

The goal is to be easily understood, retrieved, and recommended by an AI assistant that’s seeing the world like a person would—and making decisions on behalf of one.

Your NAP data helps with entity resolution. Your structured schema disambiguates location and category. Your reviews supply sentiment analysis. Your photos ground the agent’s visual model.
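To make the structured data piece concrete, here is a minimal Python sketch that builds schema.org markup as JSON-LD for a storefront. The schema.org types and properties (CafeOrCoffeeShop, PostalAddress, GeoCoordinates) are real, but the address, phone number, URL, and coordinates are placeholders invented for illustration, and the function name is my own. Treat it as one reasonable starting point, not the required format for any particular platform.

```python
import json


def local_business_jsonld(name, street, city, region, postal_code, country,
                          phone, url, lat, lon, business_type="LocalBusiness"):
    """Build a schema.org business description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": business_type,
        "name": name,
        "telephone": phone,
        "url": url,
        # The name, address, and phone together are the NAP data agents match on.
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        # Coordinates help disambiguate the physical location.
        "geo": {"@type": "GeoCoordinates", "latitude": lat, "longitude": lon},
    }


# All values below are placeholders, not a real business listing.
markup = local_business_jsonld(
    name="Northern Peak Roasters",
    street="123 Example Street",
    city="Ottawa",
    region="ON",
    postal_code="K1A 0A1",
    country="CA",
    phone="+1-613-555-0100",
    url="https://www.example.com",
    lat=45.4215,
    lon=-75.6972,
    business_type="CafeOrCoffeeShop",
)

# Paste the output inside a <script type="application/ld+json"> tag on the page.
print(json.dumps(markup, indent=2))
```

The more specific the type (a coffee shop rather than a generic business), the less guessing an agent has to do about your category.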

This is a new ecosystem of signals—and it builds on what you already have, but expects more from it.

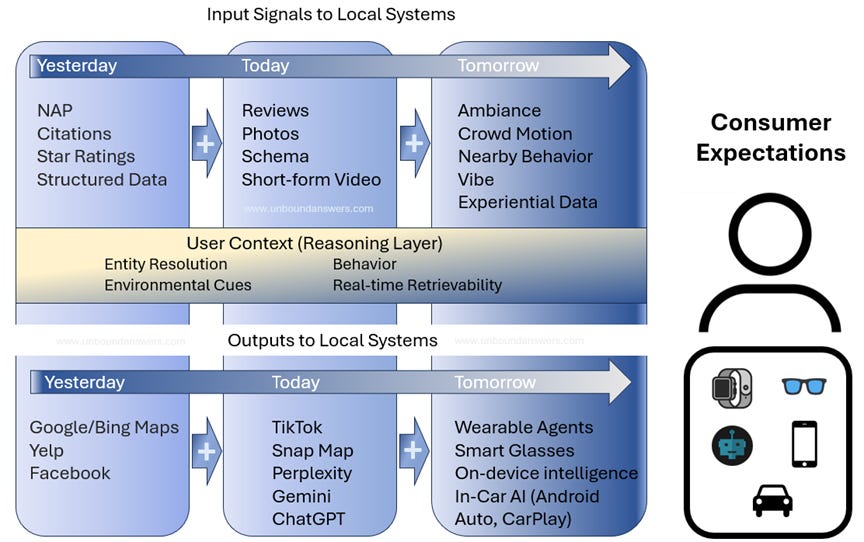

To visualize how the discovery landscape is evolving, here’s a breakdown of how input signals, user reasoning layers, and output platforms are stacking—past, present, and future. The key idea: this isn’t replacement. It’s accumulation. Local visibility now builds layer by layer, and consumer expectations are being shaped by tools that don’t even show you the local pack.

If your strategy is still built entirely on yesterday’s signals and yesterday’s outputs, you’re not just starting to fall behind. You’re becoming invisible to the systems already shaping tomorrow’s recommendations.

New Differentiators for the Next Generation of Local Discovery

Here’s where local businesses can extend their advantage beyond the basics:

· Real-Time Inventory Access

If your business can tell an agent what’s in stock, you’re already ahead. An AI isn’t going to recommend a stop if it can’t confirm the product is available. What used to be a “big brand” feature will soon be a baseline expectation.

· Context-Responsive Storefronts

Make it easy for AI to understand what your space is. Clear signage, visible entry points, and strong branding cues help both humans and machines orient themselves. If a wearable assistant can’t “see” you clearly, you may be left out of the recommendation stack.

· Short-Form Spatial Content

Encourage UGC—short videos, walk-ups, and TikToks—near your storefront. These videos are being indexed, mapped, and used as reference points by AI systems. They’re not just content; they’re breadcrumbs that lead people to you.

· Geofenced Prompting

Set up contextual offers that trigger when someone walks past your door. Whether through push notifications, loyalty apps, or even AR overlays, these real-time nudges will be handled by agents—but you’ll need to supply the data to fuel them.
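The inventory and geofencing ideas above can be sketched together in a few lines of Python. This is a simplified illustration under stated assumptions: the Store class, the SKU name, the coordinates, and the 100-metre radius are all hypothetical, and the inventory dictionary stands in for whatever feed your POS can actually export. A real agent integration would look different, but the logic it needs from you is roughly this: is the person close enough, and is the item actually there?

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class Store:
    name: str
    lat: float
    lon: float
    radius_m: float   # geofence radius around the storefront
    inventory: dict   # SKU -> units in stock (hypothetical POS export)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two coordinates, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def offer_for(store, user_lat, user_lon, wanted_sku):
    """Return an offer message if the user is inside the geofence
    and the item is in stock; otherwise return None."""
    distance = haversine_m(store.lat, store.lon, user_lat, user_lon)
    if distance > store.radius_m:
        return None  # too far away to be a useful nudge
    if store.inventory.get(wanted_sku, 0) <= 0:
        return None  # never recommend a stop for something that is sold out
    return f"{store.name} has {wanted_sku} in stock, about {distance:.0f} m away."


# Placeholder store and coordinates, for illustration only.
shop = Store(
    name="Loop Sneaker Club",
    lat=45.4215,
    lon=-75.6972,
    radius_m=100,
    inventory={"retro-trainer-size-10": 2, "retro-trainer-size-11": 0},
)

nearby = offer_for(shop, 45.4216, -75.6972, "retro-trainer-size-10")
far_away = offer_for(shop, 45.4315, -75.6972, "retro-trainer-size-10")
sold_out = offer_for(shop, 45.4216, -75.6972, "retro-trainer-size-11")
print(nearby, far_away, sold_out, sep="\n")
```

Only the first call produces an offer. The other two return None, which is the point: without fresh stock data and a location to anchor it to, the agent has nothing to say about you.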

Emerging Discovery Channels You Can’t Ignore

Not all maps are maps. But they are signals.

The era of relying solely on Google Maps, Bing Maps and Apple Maps is fading. Discovery is now happening across a range of platforms that bypass traditional search entirely—but still lead people to local businesses.

These are some of the interfaces shaping the next generation of local SEO:

· TikTok Location Tags

Users tag businesses in videos, and TikTok surfaces those tags—even if the business didn’t create the content. A single video showing someone walking into your store could generate thousands of views and hundreds of map impressions. TikTok is also quietly testing location-based reviews within tagged videos—feeding contextual information directly into the discovery layer.

· Snap Map and Spotlight

Snapchat’s location-based content makes businesses visible based on proximity and activity. You don’t need to create content; being near popular Snap activity zones is sometimes enough to show up. And, ah…more than 400 million daily active users!

· Android Auto and In-Car Voice Agents

When someone asks for coffee while driving, they aren’t looking at ten results—they’re getting one recommendation. And that decision is based on structured data, traffic flow, proximity, reviews, and retrievability from AI models. Later this year Gemini will come to Android Auto users.

· Meta’s Smart Glasses

As Meta expands its glasses platform, discovery will shift from what people type to what people see. An agent that watches what you look at and listens for cues could become the new gateway to real-world decisions.

These systems aren’t hypotheticals. They’re rolling out or available now.

What Local Businesses Should Do Next

Here’s how to stay ahead in the era of agent-based, real-world discovery. And this isn’t a pick-and-choose menu. You don’t do some of these things, you do all of these things. Think it’s hard to fight for your space in local SEO now? Wait until AI agents arrive.

Keep your SEO foundations strong.

Your NAP, structured data, reviews, and profiles are still the foundation of visibility. Not going to dive deep here – you know this stuff. Master it because you need to start moving forward again.

Make your location camera- and AI-friendly.

Think about how your business looks to someone walking by with a camera, not just a searcher behind a screen. Just being in the background of someone’s video gives these systems information about your business – make it count. The optical character recognition (OCR) and logo detection used in today’s AI systems can pick out text and logos from video at high speed, so be sure your products, brands and logos are clearly displayed.

Integrate real-time inventory if possible.

If your products or services are time-sensitive or frequently sold out, exposing that availability is no longer optional. It may be the difference between being recommended and being ignored. I bet your POS is currently capable of tracking this, if you do the work to update it.

Test your retrievability.

Can ChatGPT name your business if asked about your category in your neighborhood? What about Perplexity? If not, you’ve got work to do. Create a list of important actions for your business, built around products, services and things related to your business. Build prompts to ask the systems and judge whether you perform well or poorly.

Think spatial, not just searchable.

Discovery now happens through visuals, motion, and presence. If you can’t be found in that layer, SEO alone won’t save you. You need to start thinking spatially and environmentally. If a consumer can see inside your business now (and they can), have you done everything you can to make the environment one they would want to visit?

Dive deep on GenAI Search Optimization.

All the usual best practices being developed for being noticed and included in GenAI Search systems apply to local businesses as well. Manage content for chunkability, add structured data to your site, and so on. A deeper dive into this topic can be found in my Chunked, Retrieved, Synthesized article.
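For the "test your retrievability" step, a small scoring harness keeps the exercise honest. The Python sketch below is a rough illustration, not a finished tool: the function names, the prompt templates, and the neighborhood are my own assumptions, and fake_assistant is a stand-in you would replace with your own call to whichever assistant you are testing. It deliberately avoids assuming any vendor's API.

```python
from typing import Callable, Iterable


def build_prompts(category: str, neighborhood: str, needs: Iterable[str]) -> list[str]:
    """Turn a category and a list of customer needs into test prompts."""
    prompts = [f"What is the best {category} in {neighborhood}?"]
    prompts += [f"Where can I {need} in {neighborhood}?" for need in needs]
    return prompts


def retrievability_score(business_name: str, prompts: list[str],
                         ask: Callable[[str], str]) -> float:
    """Fraction of prompts whose answer mentions the business by name."""
    if not prompts:
        return 0.0
    hits = sum(business_name.lower() in ask(prompt).lower() for prompt in prompts)
    return hits / len(prompts)


def fake_assistant(prompt: str) -> str:
    """Stand-in for a real assistant; swap in your own API call here."""
    if "best" in prompt:
        return "Many locals recommend Northern Peak Roasters."
    return "I could not find a specific business for that."


# Placeholder category, neighborhood, and needs, for illustration only.
prompts = build_prompts(
    category="coffee roaster",
    neighborhood="Riverside",
    needs=["buy Ethiopian beans", "get a pour-over"],
)
score = retrievability_score("Northern Peak Roasters", prompts, fake_assistant)
print(f"Named in {score:.0%} of {len(prompts)} prompts")
```

A simple name match like this misses paraphrases and misspellings, so read the raw answers too. Run the same prompt list monthly and the trend will tell you more than any single score.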

Conclusion: Don’t Just Rank—Be Recognized

The next wave of local discovery is unfolding in front of us. Not through map packs. Not through “near me” searches.

Through smart glasses. Through agents. Through AI that sees where people are and suggests where they might go next. Through all the new platforms gathering hundreds of millions (and billions) of active users. What? You think ChatGPT’s 500 million weekly active users are going to hop to Google or Bing Maps to do a local search? Do you think ChatGPT isn’t working on ways to deliver them a fuller answer that removes the need to visit those maps? This change is happening now. Consumer behavior is changing and accelerating in new directions.

To win in that world, you’ll need more than rankings. You’ll need presence. Retrievability. Contextual clarity and agent-understood relevancy.

You’re not just optimizing content anymore. You’re designing for machine perception.

And the sooner you start, the more discoverable your future becomes.