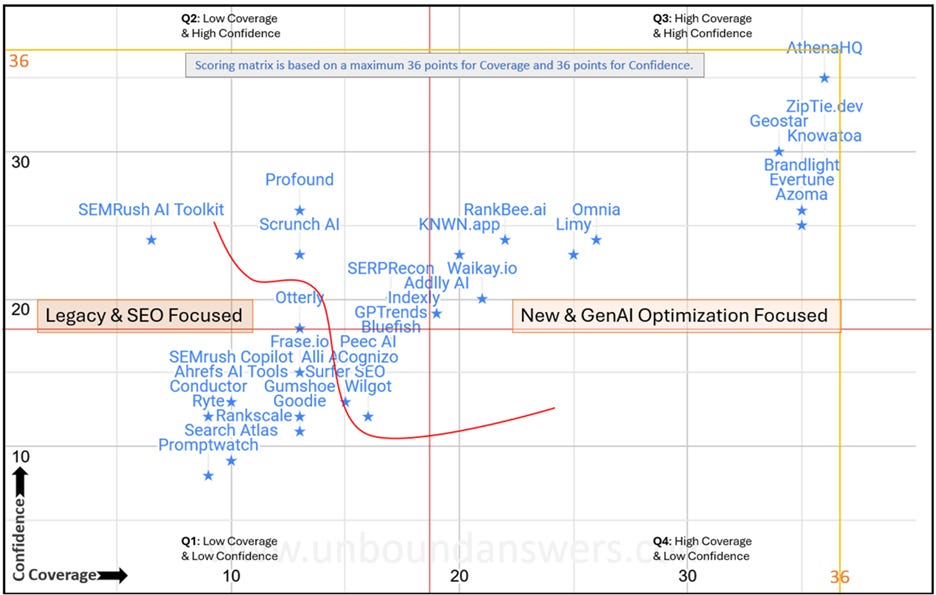

The GenAI Optimization Tool Quadrant: 30+ Toolsets: Coverage Scored, Confidence Tested

What work are they doing, and can you believe the marketing?

I have been working on this for months. It’s not perfect, but it’s a start, even if it is, essentially, my opinion (but hey, I did help build Bing Webmaster Tools, so maybe I know something about toolsets, data, workflows and such). Every tool in this quadrant is scored twice: first for how much practical GenAI SEO work it claims to support — that’s the Coverage — and again for how clearly and credibly it explains that work — that’s the Confidence. Together, these scores help indicate what’s really on offer before you spend a dime. I’ve also posted it on my site and will be keeping it updated as time goes on.

We’re in the season of big AI claims. If you work in SEO, you’ve probably seen more “AI-powered toolkits” pop up in your inbox in the last six months than in the last six years combined. Every vendor promises to reduce your workload, find you untapped success, and help you perform better in today’s AI-powered search world.

And every one of those tools wants you to believe they’re doing actual work, not just bolting a generic language model to a flashy dashboard. But when you peel back the marketing layer, how many of these new tools actually help you do the work that matters? (Spoiler: quite a few!)

That’s the question I’ve been tracking for months, and it’s why I built this quadrant you’re about to see. It maps more than 30 tools claiming to help businesses tackle optimization in the age of GenAI. And it tries to answer a simple question: what do these tools actually do, and how well do they explain how they do it?

Bit of expectation setting: if you think I bought access to all these tools and spent months using each one, you’ll be disappointed. My goal here is to help people who are just starting to decide which tools to spend their money on. So I’m approaching this from the outside, exactly as they would.

Everything I’ve tracked is based on information openly available from each company’s website; no free accounts, no free access. As I publish this, only a single tool company is even aware I’m doing this. So no one influenced any placements on this chart. (The company that has seen it? They’re not at the top of the chart.)

Bottom line, this is my opinion based on the analysis I have done on openly available, public information. I don’t endorse any toolset or company, nor do I make any claims to the validity of their content, tools, output of work or results from that work.

So why build this map at all?

I spent years helping businesses figure out how to get search engines to notice them. And from my time at Bing, I have insight into the other side of the glass: not every promise made by a shiny new tool lines up with how search actually works.

Search fundamentals still matter: you still need clear signals, well-structured content, and trust. But what’s doing the heavy lifting has changed. It’s no longer just search engines crawling and ranking pages. Now, large language models retrieve, chunk, and synthesize information in ways the old systems didn’t. What’s changing is how your content gets surfaced, and whether the tools you pay for help you shape that outcome. Your workflows are shifting with this, so the tools need to evolve to keep up.

What this quadrant is (and isn’t)

Think of this chart as a compass, not a verdict. It won’t tell you the one perfect tool to buy tomorrow. It won’t crown a single “winner.” It shows you who’s covering the important bases, and maybe who just says they are. It’s easy to look top-right and claim “There’s the winner!”, but your reality is likely more nuanced than that. What work are you actually doing? What outcomes do you expect? Is a tool too complex? Is it reliable? Can you afford it? Those, and so many more questions, need answering all over again as the toolset landscape changes. I’m hoping this quadrant chart will help you on your journey.

Here’s how it works, in plain English.

Coverage Score: This is the practical side. Out of a maximum of 36, how much real work does the tool enable you to do? Does it help you structure content for retrieval? Optimize your chunking? Track vector index presence? Or does it mostly stick to classic keyword advice in a new coat of paint? How many features does it have and what work do they say they do? I’m looking at features and whether each feature has usefulness (tells you to do the work) or utility (offers to do the work for you). More is more here.

Confidence Score: This is the credibility side. Does the tool provider show you how things actually work? Do they share case studies, technical documentation, detailed feature explainers, examples of real-world outcomes? Or do they just promise “AI magic” without explaining the mechanics? Is it all sales-funnel and no substance? Is it all “let’s talk”, where they get your time, and you get…what exactly? Based on the website, could you make a comfortable decision to buy access and justify it to your company?

A tool can score high on Coverage by claiming to do a lot, but if the explanations are thin, your confidence should be too. The opposite is true, too: some narrowly focused tools do one or two jobs very well and back it up with clear, practical detail. Those will show up with lower Coverage but higher Confidence.

Which is why YOUR winner may not automatically be in the top right of the chart. But, if you need a place to start, maybe that’s the right spot. You can work backwards based on your own time for demos and such.

Why 36?

The full scoring framework behind this quadrant covers twelve core metrics I believe matter for GenAI-era optimization. Each can contribute up to three points: one for the basics, one for mid-level capability, one for advanced support. Stack it up, and you get a maximum of 36 points for Coverage. Confidence runs on the same scale: clear, consistent explanations across the same functional areas.
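To make the arithmetic concrete, here is a minimal Python sketch of how twelve metrics at up to three points each roll up into a score out of 36. This is an illustration under assumptions, not the actual scoring framework: the individual metrics and checkpoints aren't published, so the per-metric points below are made-up placeholders for a hypothetical tool, and the midpoint of 18 used to split the quadrants is my assumption for the example rather than a stated rule.

```python
METRIC_COUNT = 12           # from the article: twelve core metrics
MAX_POINTS_PER_METRIC = 3   # basics, mid-level capability, advanced support
MAX_SCORE = METRIC_COUNT * MAX_POINTS_PER_METRIC  # 36


def total_score(points_per_metric):
    """Sum per-metric points (each 0..3) into a total between 0 and 36."""
    if len(points_per_metric) != METRIC_COUNT:
        raise ValueError(f"expected {METRIC_COUNT} metrics")
    for points in points_per_metric:
        if not 0 <= points <= MAX_POINTS_PER_METRIC:
            raise ValueError(f"points must be 0..{MAX_POINTS_PER_METRIC}")
    return sum(points_per_metric)


def quadrant(coverage, confidence, midpoint=MAX_SCORE / 2):
    """Label a tool's quadrant. The midpoint split is an assumption."""
    cov = "high coverage" if coverage >= midpoint else "low coverage"
    conf = "high confidence" if confidence >= midpoint else "low confidence"
    return f"{cov}, {conf}"


# Hypothetical tool: placeholder points, not real data about any vendor.
coverage_points = [3, 3, 2, 2, 3, 1, 2, 3, 2, 1, 2, 3]
confidence_points = [1, 1, 0, 1, 2, 0, 1, 1, 0, 0, 1, 1]

coverage = total_score(coverage_points)      # 27 out of 36
confidence = total_score(confidence_points)  # 9 out of 36
print(coverage, confidence, quadrant(coverage, confidence))
```

In this made-up case the tool claims a lot (27 of 36 on Coverage) but explains little (9 of 36 on Confidence), which is exactly the kind of gap the two-score approach is meant to surface.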

I’m not publishing every single checkpoint. Not because I’m trying to guard a secret recipe (well, maybe a little bit), but because the details are less important than the point: we should see how much actual work a tool does, not just how good its marketing is. If you want to make smarter buying decisions, you need a map, not just a sales page.

Read it like a map, not a final scorecard

This is not a best-to-worst ranking. It’s directional. It gives you a place to start asking questions.

A tool in the upper-right (high Coverage, high Confidence) might genuinely help you tackle multiple layers of GenAI optimization at once. Or it might spread itself too thin. That’s for you to test and learn.

A tool that sits mid-pack but has one standout function might be perfect if your needs are aligned and specific. Maybe you just want better on-page recommendations and chunk structuring. You don’t need the overhead of a heavyweight suite promising vector index simulations and retrieval testing if you’ll never use them.

Same rule as SEO since day one: trust, but verify.

There’s a reason experienced SEOs don’t buy the biggest platform by default. You check the features, ask for a trial, test the output, run it against your own benchmarks. If it works, you keep it. If it doesn’t, you move on.

It’s the same here. The difference now is that the marketing layer has grown ten times thicker. Everyone has an AI badge on their homepage. Everyone says they’re solving the whole stack. Some might be. Some might not. This quadrant doesn’t make that decision for you; it gives you a signal so you can ask better questions.

Before you pay for any GenAI tool, dig deeper than the landing page. Find real documentation. See clear examples of how it fits your workflow. Know exactly which parts of the job it handles, and which parts you’re still on the hook for: does it just help with outlining? Does it surface chunks for better LLM retrieval? Does it test how your content appears in zero-click AI answers?

A tool might score well overall, but if you only care about a single function, something further down the chart could be the better fit. The goal is alignment, not hype.

What I’m watching next

I’ll keep adding new tools to this quadrant as they appear, and they’re appearing fast. If you see a tool missing here that deserves to be measured, tell me, but let’s set some expectations: I’m not running random toolset requests just to provide one-off answers for everyone.

If you suggest it, I’ll take a look and see if I think it should be on the chart, and update accordingly as time allows. If you’ve tested one that claims a lot but delivers little, I want to hear that too. Also, be careful suggesting toolsets that aren’t fully built out. This only works if we test live products. No sense scoring a toolset that’s only half developed, as it just hurts their score and isn’t representative.

On the other end of the spectrum, you can be sure that traditional SEO tools will not score well here. The signals I watch are aligned to the new optimization work, not traditional SEO work.

One last thing

AI isn’t magic. GenAI is changing how search works, faster than some want to admit, but underneath the buzzwords, we’re still solving the same puzzle: make it easier for the right information to be found, trusted, and surfaced at the right moment.

The difference now is that you need different tools, or at least, a clearer sense of what your tools are actually doing before you swipe the credit card. If this quadrant helps you ask sharper questions and avoid shiny half-promises, it’s done its job. Is it perfect? Hardly…but it’s a start.
