Redefining Spam: From Spam Teams to Semantic Judgments

Google and Bing used rule books. GenAI just doesn’t need your content.

If you think spam is just keyword stuffing and shady back-links, you're living in the wrong decade.

Today, spam isn’t always human-readable garbage, it’s content that Generative AI search systems decide not to retrieve. That’s right. You might have written a decent piece. It might even rank well in traditional SERPs. But if it doesn’t pass the AI’s sniff test, it’s as good as invisible.

So how do GenAI-based search systems decide what’s spam, what’s low-quality, what’s worth retrieving and what gets left behind?

Let’s unpack what’s going on behind the scenes—and more importantly, what you can do about it.

How GenAI Search Works

Modern GenAI search engines like Perplexity (https://www.perplexity.ai), You.com (https://you.com), and Arc Search (https://arc.net) (and yes, Gemini, CoPilot and ChatGPT, too) aren’t ranking pages like traditional Google search does. They use a system called Retrieval-Augmented Generation, or RAG.

Here’s the simplified breakdown:

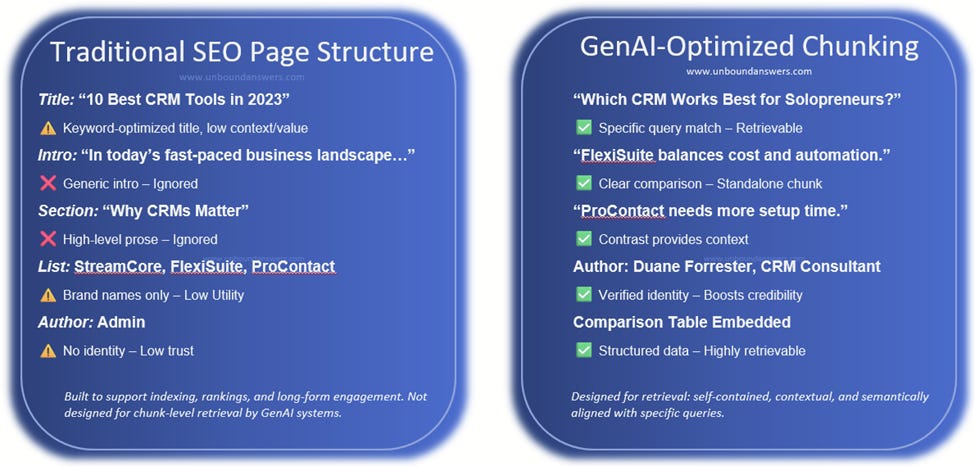

Your content gets chunked: split into smaller digestible sections.

Those chunks get embedded: converted into vectors, mathematical representations of meaning.

When someone types a query, the engine retrieves chunks closest in meaning to the query vector.

The AI generates an answer based on the retrieved chunks, and often paraphrases or cites them.

So… if your content doesn’t get retrieved in step 3? It might as well not exist.

That’s the new definition of “spam.” It’s not black-hat. It’s just content that’s filtered out by default.

In the past, platforms like Google and Bing staffed full-scale Spam Teams. These were human reviewers backed by hand-coded rules and penalties designed to catch manipulative tactics like keyword stuffing, cloaking, and link farming. These were overt systems with clear consequences: warnings, penalties, demotions. But in GenAI-driven search environments, there’s no red flag, and most likely no manual review queue. The filtering is invisible. These systems make semantic judgments at retrieval time, powered by embeddings, chunk scoring, and trust modeling. They don’t flag content as spam. They simply don’t use it. And for marketers, this is a much harder problem to manage. There’s no alert when your content disappears. No warning. No penalty. It just stops showing up, and you may never realize you’ve been filtered out.

In traditional search, you’d sometimes get an alert in your Google or Bing account, or you’d see your content fall in rank, or not get indexed. Overt clues of a problem. In GenAI-based search, unless a platform sets up a signal to alert you, you’ll never know. You’ll just never show up in a result.

What GenAI Considers Spammy (Even If It Isn’t)

Let’s be clear: GenAI systems are evaluating intent, but not yours as a content creator. They don’t care whether you wrote with good faith or tried to be helpful. Instead, they focus on whether your content is retrievable, trustworthy, and useful in response to a specific query.

The user’s intent, on the other hand, is a major part of the equation. These systems are constantly working to interpret what the searcher really means, refining ambiguous prompts, filtering unsafe inputs, and surfacing different types of answers based on perceived goals.

Intent matters, but it’s the user’s intent, not the author’s, that determines what gets retrieved. (Sidebar: YOU should always be working to know what your customer’s intent is!)

Which means your content can be skipped entirely, not because it’s “spammy,” but because it doesn’t meet the system’s evolving definition of what’s worth using.

Here’s what that looks like in practice:

1. Semantic Clutter

LLMs retrieve chunks based on semantic proximity. If your content is bloated with generalities, vague phrasing, or fluffy intros, it may fail to match any specific query well enough to surface.

Example: A 1,200-word blog post that dances around a question without ever answering it directly? That’s dead weight.

2. Embedding Collisions

If your content is too similar (vector-wise) to something already published (especially by higher-authority domains), your version may get filtered out as a duplicate or near-match.

This is what happens when people publish ChatGPT-written articles with slight rewrites: content that’s technically original, but indistinguishable from everything else. The embeddings are still close enough to existing material that it’s essentially redundant.

3. Weak Attribution

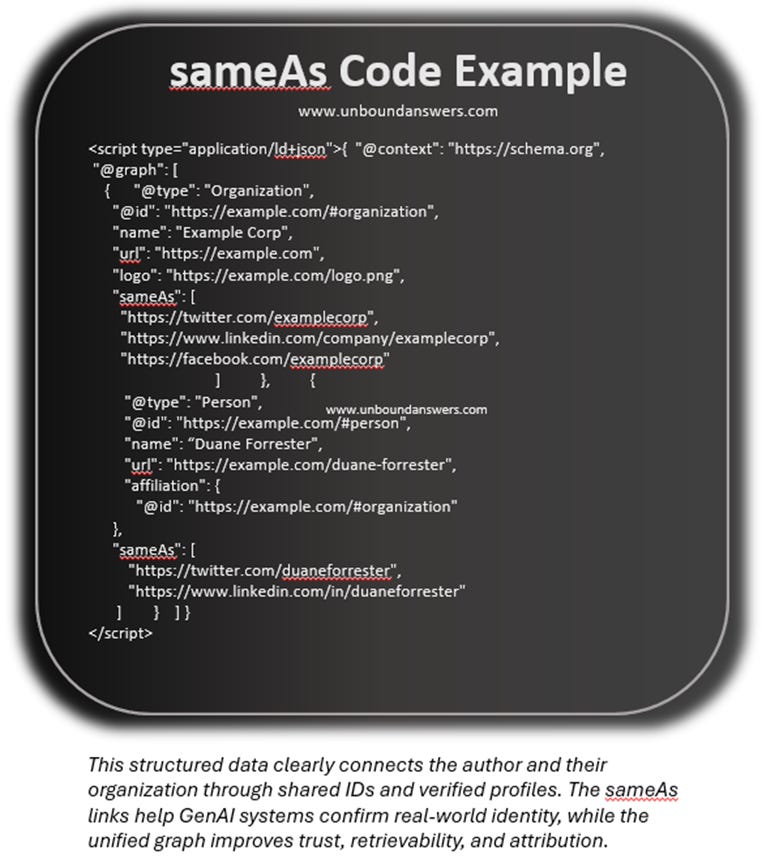

The model doesn’t just care about what was said, it cares about who said it and whether it can trust them. (Knowledge graphs in action, defining relationships.)

If there’s no structured data to back up your author identity, no strong entity relationships, and no trustworthy citations, your chunk may simply not qualify for retrieval.

4. Prompt Injection Fallout

A recent paper at EMNLP 2024 (“Ranking Manipulation for Conversational Search Engines”) shows how attackers can inject prompts into their content that game the retrieval process, causing spammy or misleading material to surface higher in GenAI search outputs.

And according to Barracuda Networks, more than 50% of email spam is now generated by AI models. That’s a major shift—not just in volume, but in sophistication.

Quick note: Yes, that Barracuda stat is about email spam. But it matters because it shows how GenAI is being used to generate manipulative content at scale. The same tactics, rewriting, prompt engineering, and semantic mimicry, are crossing over into web content. So while we're not talking about inbox junk in this article, we are talking about the same core issue: AI systems being flooded with content that looks helpful but breaks trust.

It’s not just low-effort spam anymore, it’s adversarial content engineered to hijack attention. That random competitor who suddenly dominates Perplexity.ai for your core keyword? It might not be organic. It might be adversarial manipulation.

This isn’t theoretical. The research team tested it across multiple engines and found that it worked. Which means your good content isn’t just competing with other good content, it’s now competing with junk that’s learned how to exploit the system.

How to Stay Visible When GenAI Systems Start Skipping You

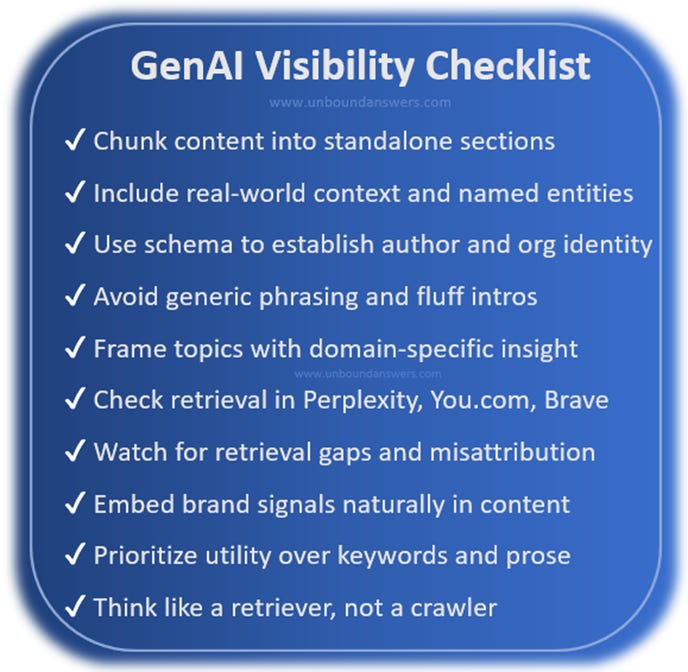

You’re not just publishing for people anymore. You’re publishing for systems that decide what gets pulled, what gets cited, and what gets used, and they don’t pull everything equally. If you want a checklist, one for GenAI search visibility might look like this:

If that’s not enough to get started with, here are more ways to make sure your content isn’t silently cut from the conversation:

1. Make Your Content Chunk-Friendly

You’re not optimizing entire pages anymore. You’re optimizing for self-contained sections. The kind GenAI systems can understand and retrieve in isolation.

Do this:

Use clear headers, subheaders, and structured markup

Include real-world context (product names, locations, tools, brands)

Avoid walls of text or bloated intros; start sharp and stay on topic

2. Stop Parroting, Start Framing

AI systems don’t need more summaries of what already exists, they need unique angles, novel inputs, and original phrasing.

Do this:

Share primary insights, unique analogies, and specific examples

Use niche language or technical framing specific to your domain

Frame topics with a clear point-of-view, not just surface-level information

Example: A RedMonk Conversation from August 12, 2024 titled “Generative AI is Changing How Developers Work (with Adam Seligman)” clearly demonstrates how domain-specific insight and real-world context can align with GenAI retrieval criteria. Because it’s expert-driven, conversational, and grounded in actual developer workflows, it’s precisely the kind of content that embedding-based systems are designed to pick up and reuse.

3. Structure Your Entity Identity

The more confidence a GenAI system has in who you are, the more likely it is to include your content in retrieval.

Do this:

Use Person and Organization schema with proper sameAs links

Include author bios with credentials (not fluff) on key pages

Reference yourself consistently across platforms and metadata

You’re not just trying to “build authority” in the abstract. You’re helping the retrieval system recognize you as a valid source.

4. Watch for Retrieval Gaps and Manipulations

Start actively monitoring how your content performs in GenAI search.

Do this:

Test how your content surfaces in Perplexity (https://www.perplexity.ai), You.com (https://you.com), and Brave Search (https://search.brave.com) using your brand terms, core topics, and common queries. These GenAI-powered tools offer varying levels of citation transparency and synthesis, making them useful for spotting what content is being retrieved, paraphrased, or skipped entirely.

Do manual checks to see who’s getting cited and create a checklist to understand why

Look for mis-attributions or odd brand exclusions that could hint at prompt manipulation

Also watch for competitors that surge out of nowhere. Not every win is organic.

5. Embed Brand Context Into Your Chunks

Don’t just say “We’re a software company.” Give the model something to work with.

Instead of this:

“We provide logistics solutions.”

Write this:

“AcmeLogix is a US-based logistics automation provider that helps mid-size freight companies reduce warehousing costs through predictive routing software.”

That gives the system context, entities, and substance, all of which help your chunk get picked when someone searches for “routing automation for freight carriers.”

This Is Spam Now

Let’s be real. Most of us still think spam is about links from bad-actor-domains and sites with 100% bounce rates, or even more overt black-hat tactics.

But GenAI is changing the definition.

Spam now means:

Content that’s too generic to be retrieved

Content that lacks source attribution

Content that’s framed identically to 10 other sources

Content that gets edged out by manipulative adversarial prompts

And because these systems don’t “penalize” you like Google used to (they just ignore you now) most brands won’t even realize they’re invisible until it’s too late.

Final Word: The Invisible Filter

You don’t need to outsmart the system. You need to stop sounding like everyone else.

Every piece of content you publish should pass one test: If a single chunk is pulled from the page, does it stand on its own? Does it deliver enough clarity, credibility, and value to be worth retrieving?

If the answer is no, it’s already invisible.

This isn’t about stuffing your brand into every chunk. It’s about being useful. The system knows where it came from. If it drops a citation, you’ll be credited, but that only happens if the content is worthwhile.

The future of search isn’t about volume. It’s about utility, as defined by machines.

And if your content doesn’t provide utility, it won’t be used.