Beyond Fan-Out: Turning Question Maps Into Real AI Retrieval

Query fan-out is a smart start — but real AI visibility demands testing, indexing, and authority work too.

If you’ve spent time in SEO circles lately, you’ve probably heard query fan-out used in the same breath as semantic SEO, AI content, and vector-based retrieval. It sounds new, but it’s really an evolution of an old idea: a structured way to expand a root topic into the many angles your audience (and an AI) might explore. If this sounds familiar, it should. Marketers have been digging for this depth since “search intent” became a thing years ago; GenAI has simply given the concept fresh buzz.

Like many SEO concepts, fan-out has picked up hype along the way. Some people pitch it as a silver bullet for modern search (it’s not). Others call it just another keyword clustering trick dressed up for the GenAI era. The truth, as usual, sits in the middle: query fan-out is genuinely useful when used wisely, but it doesn’t solve the deeper layers of today’s AI-driven retrieval stack.

This guide sharpens that line. We’ll break down what query fan-out actually does, when it works best, where its value runs out, and which extra steps (and tools) fill in the critical gaps. If you want a full workflow from idea to real-world retrieval, this is your map.

What Query Fan-Out Really Is

Most marketers already do some version of this. You start with a core question like “How do you train for a marathon?” and break it into logical follow-ups: “How long should a training plan be?”, “What gear do I need?”, “How do I taper?” and so on.

In its simplest form, that’s fan-out: a structured expansion from root to branches. What today’s fan-out tools add is scale and speed. They automate the mapping of related sub-questions, synonyms, adjacent angles, and related intents. Some visualize this as a tree or cluster. Others layer on search volumes or semantic relationships.

Think of it as the next step after the keyword list and the topic cluster. It helps you make sure you’re covering the terrain your audience, and the AI summarizing your content, expects to find.
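To make the root-to-branches idea concrete, here is a minimal sketch of a fan-out tree as a data structure. The `Question` class and `flatten` helper are hypothetical names chosen for illustration, not part of any fan-out tool. The root and first-level branches reuse the marathon example above; the nested follow-up under the taper branch is invented to show a second level.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    """One node in a fan-out tree: a question plus its follow-ups."""
    text: str
    children: list["Question"] = field(default_factory=list)


def flatten(node: Question, depth: int = 0) -> list[tuple[int, str]]:
    """Walk the tree depth-first and return (depth, question) pairs."""
    rows = [(depth, node.text)]
    for child in node.children:
        rows.extend(flatten(child, depth + 1))
    return rows


# Root and first-level branches come from the marathon example;
# the nested follow-up under the taper branch is a made-up illustration.
tree = Question(
    "How do you train for a marathon?",
    [
        Question("How long should a training plan be?"),
        Question("What gear do I need?"),
        Question(
            "How do I taper?",
            [Question("How many weeks before race day should I taper?")],
        ),
    ],
)

# Print the tree as an indented outline, one question per line.
for depth, text in flatten(tree):
    print("  " * depth + text)
```

Keeping the map as a tree rather than a flat keyword list preserves which question each branch grew out of, which is handy later when you decide which section of a page each branch belongs in.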

Why Fan-Out Matters for GenAI SEO

This matters now because AI search and agent answers don’t surface whole pages the way a blue link did. Instead, they break your page into chunks: small, context-rich passages that answer precise questions.
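Chunking is easier to reason about with a small example. The sketch below splits a page into one chunk per heading. It assumes the page has already been converted to plain text where section headings start with `## `; that prefix, the `split_into_chunks` name, and the sample page text are all illustrative assumptions. Real systems chunk in many different ways (by token count, by sentence, with overlap), so treat this as one simple strategy, not how any particular AI product does it.

```python
def split_into_chunks(page_text: str, heading_prefix: str = "## ") -> list[dict]:
    """Split text into one chunk per heading, keeping the heading as context."""
    chunks = []
    current = {"heading": "(intro)", "lines": []}
    for line in page_text.splitlines():
        if line.startswith(heading_prefix):
            # A new heading closes the previous chunk (if it has any body text).
            if current["lines"]:
                chunks.append(current)
            current = {"heading": line[len(heading_prefix):].strip(), "lines": []}
        elif line.strip():
            current["lines"].append(line.strip())
    if current["lines"]:
        chunks.append(current)
    # Headings with no body text are dropped: they answer nothing on their own.
    return [{"heading": c["heading"], "text": " ".join(c["lines"])} for c in chunks]


# Placeholder page text, written only to exercise the function.
page = """Marathon training takes months of steady work.
## How long should a training plan be?
Plan length depends on your starting fitness.
## How do I taper?
Tapering means cutting mileage before race day.
"""

chunks = split_into_chunks(page)
for chunk in chunks:
    print(chunk["heading"], "->", chunk["text"])
```

Each chunk keeps its heading, so the passage still makes sense when it is read without the rest of the page.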

This is where fan-out earns its keep. Each branch on your fan-out map can be a stand-alone chunk. The more relevant branches you cover, the more of the topic your page can answer, which can help in four ways:

1. Strengthening Semantic Density

A page that only skims the surface of a topic gives a retrieval system little to match against. If you cover multiple related angles clearly and tightly, each chunk carries more of the terms and context a specific prompt is looking for. Those signals tell the AI that the passage is likely to answer the prompt.

2. Improving Chunk Retrieval Frequency

The more distinct, relevant sections you write, the more chances you create for an AI to pull your work. Fan-out naturally structures your content for retrieval.

3. Boosting Retrieval Confidence

If your content aligns with more ways people phrase their queries, it gives an AI more reason to trust your chunk when summarizing. This doesn’t guarantee retrieval, but it helps with alignment.

4. Adding Depth for Trust Signals

Covering a topic well shows authority. That can help your site earn trust, which nudges retrieval and citation in your favor.

Fan-Out Tools: Where to Start Your Expansion

Query fan-out is practical work, not just theory. You need tools that take a root question and break it into every related sub-question, synonym, and niche angle your audience (or an AI) might care about. A solid fan-out tool doesn’t just spit out keywords; it shows connections and context, so you know where to build depth.

Below are reliable, easy-to-access tools you can plug straight into your topic research workflow:

• AnswerThePublic: The classic question cloud. Visualizes what, how, and why people ask around your seed topic.

• AlsoAsked: Builds clean question trees from live Google People Also Ask data.

• Frase: Topic Research module clusters root queries into sub-questions and outlines.

• Keyword Insights: Groups keywords and questions by semantic similarity, great for mapping searcher intent.

• Semrush Topic Research: Big-picture tool for surfacing related subtopics, headlines, and question ideas.

• Answer Socrates: Fast People Also Ask scraper, cleanly organized by question type.

• LowFruits: Pinpoints long-tail, low-competition variations to expand your coverage deeper.

• WriterZen: Topic Discovery clusters keywords and builds related question sets in an easy-to-map layout.

If you’re short on time, start with AlsoAsked for quick trees or Keyword Insights for deeper clusters. Both deliver instant ways to spot missing angles.

Having a clear fan-out tree is only step one. Next comes the real test: proving that your chunks actually show up where AI agents look.

Where Fan-Out Stops Working Alone

So fan-out is helpful. But it’s only the first step. Some people stop here, assuming a complete query tree means they’ve future-proofed their work for GenAI. That’s where the trouble starts.

Fan-out does not verify if your content is actually getting retrieved, indexed, or cited. It doesn’t run real tests with live models. It doesn’t check if a vector database knows your chunks exist. It doesn’t solve crawl or schema problems either.

Put plainly: fan-out expands the map. But a big map is worthless if you don’t check the roads, the traffic, or whether your destination is even open.

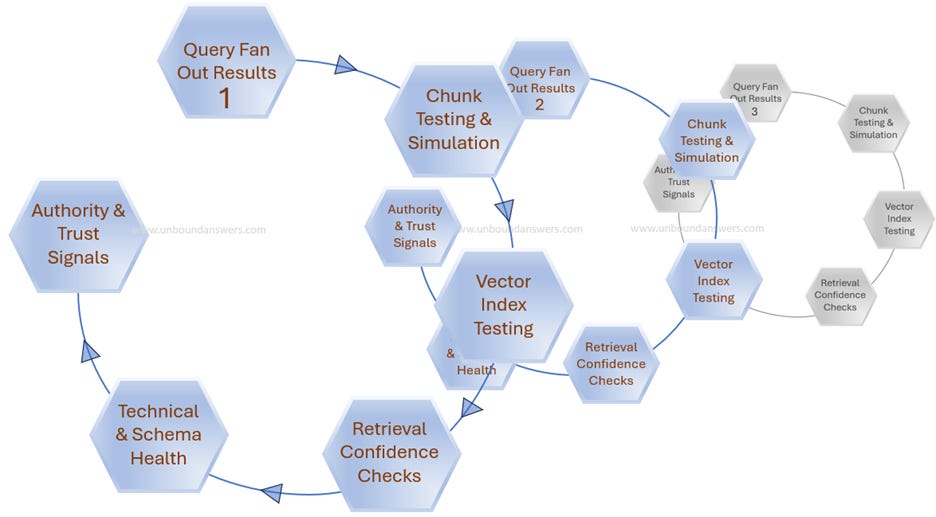

The Practical Next Steps: Closing the Gaps

Once you’ve built a great fan-out tree and created solid chunks, you still need to make sure they work. This is where modern GenAI SEO moves beyond traditional topic planning. The key is to verify, test, and monitor how your chunks behave in real conditions.

Below is a practical list of the extra work that brings fan-out to life, with real tools you can try for each piece.

1. Chunk Testing & Simulation

You want to know: Does an LLM actually pull my chunk when someone asks a question? Prompt testing and retrieval simulation give you that window.

Tools you can try:

• LlamaIndex: Popular open-source framework for building and testing RAG pipelines. Helps you see how your chunked content flows through embeddings, vector storage, and prompt retrieval.

• Otterly: Practical, non-dev tool for running live prompt tests on your actual pages. Shows which sections get surfaced and how well they match the query.

• Perplexity Pages: Not a testing tool in the strict sense, but useful for seeing how a real AI assistant surfaces or summarizes your live pages in response to user prompts.
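Before you run live prompt tests, a rough offline check can flag fan-out questions that no chunk even attempts to answer. The sketch below does that with plain word overlap (Jaccard similarity). This is a crude stand-in for what a real model does, and everything in it is an assumption made for illustration: the function names, the stopword list, the `0.05` threshold, and the sample chunks.

```python
import re

# Small illustrative stopword list; a real one would be much longer.
STOPWORDS = {"a", "an", "the", "do", "i", "how", "what", "should",
             "be", "to", "for", "you", "is", "of"}


def tokens(text: str) -> set[str]:
    """Lowercase the text and keep the distinct non-stopword terms."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of terms the two sets have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def coverage_report(questions, chunks, threshold=0.05):
    """For each question, return (question, best chunk or None, score)."""
    report = []
    for question in questions:
        q_tokens = tokens(question)
        best_name, best_score = None, 0.0
        for name, text in chunks.items():
            score = jaccard(q_tokens, tokens(text))
            if score > best_score:
                best_name, best_score = name, score
        covered = best_score >= threshold
        report.append((question, best_name if covered else None, round(best_score, 3)))
    return report


questions = [
    "How long should a training plan be?",
    "What gear do I need?",
    "How do I taper?",
]
# Placeholder chunks; note that nothing here covers gear.
chunks = {
    "plan-length": "A marathon training plan usually runs several weeks; "
                   "pick a plan length that fits your base mileage.",
    "taper": "To taper, reduce weekly mileage in the final stretch before race day.",
}

report = coverage_report(questions, chunks)
for question, chunk_name, score in report:
    print(f"{question} -> {chunk_name or 'NO CHUNK'} ({score})")
```

A question that comes back with no chunk is a gap in the draft, not proof of anything about a live model. The tools above are still what tell you how real systems treat the page.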

2. Vector Index Presence

Your chunk must live somewhere an AI can access. In practice, retrieval systems store chunks as embeddings in a vector database and fetch them by similarity search. You can’t see inside another company’s vector store, but running your own index lets you test that your content can be cleanly chunked, embedded, and retrieved using the same kind of similarity search that larger GenAI systems rely on behind the scenes. That confirms your pages are structured to work the same way.

Tools to help:

• Weaviate: Open-source vector DB for experimenting with chunk storage and similarity search.

• Pinecone: Fully managed vector storage for larger scale indexing tests.

• Qdrant: Good option for teams building custom retrieval flows.
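The chunk, embed, and retrieve loop described in this section can be sketched without any external service. The example below is a toy: it uses word counts as a stand-in for learned embeddings and a plain Python list as the "index", so it does not reflect the API of Weaviate, Pinecone, or Qdrant. `TinyVectorIndex`, `embed`, and the sample chunks are hypothetical names and data for illustration only.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in 'embedding': a bag-of-words term-frequency vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorIndex:
    """In-memory index: stores (chunk_id, vector) pairs and ranks by cosine."""

    def __init__(self):
        self._items = []

    def add(self, chunk_id: str, text: str) -> None:
        self._items.append((chunk_id, embed(text)))

    def search(self, query: str, top_k: int = 3) -> list[tuple[str, float]]:
        query_vec = embed(query)
        scored = [(cid, cosine(query_vec, vec)) for cid, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = TinyVectorIndex()
# Placeholder chunks, one per fan-out branch.
index.add("gear", "Marathon gear checklist: running shoes, socks, and a hydration belt.")
index.add("taper", "How to taper: cut weekly mileage before race day.")
index.add("plan", "A training plan sets weekly mileage targets.")

results = index.search("What gear do I need for a marathon?", top_k=2)
for chunk_id, score in results:
    print(chunk_id, round(score, 3))
```

Swapping `embed` for a real embedding model and the list for one of the databases above changes the quality of the matches, but the shape of the test stays the same: put chunks in, ask fan-out questions, and see which chunk comes back first.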

3. Retrieval Confidence Checks

How likely is your chunk to win out against others?

This is where prompt-based testing and retrieval scoring frameworks come in. They help you see whether your content is actually retrieved when an LLM runs a real-world query, and how confidently it matches the intent.

Tools worth looking at:

• Ragas: Open-source framework for scoring retrieval quality. Helps test if your chunks return accurate answers and how well they align with the query.

• Haystack: Developer-friendly RAG framework for building and testing chunk pipelines. Includes tools for prompt simulation and retrieval analysis.

• Otterly: Non-dev tool for live prompt testing on your actual pages. Shows which chunks get surfaced and how well they match the prompt.
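Whichever tool produces the rankings, it helps to boil a test run down to a couple of numbers you can track over time. The sketch below computes two common retrieval measures: hit rate at k (how often the right chunk shows up in the top k results) and mean reciprocal rank (how high it ranks on average). It does not use the Ragas or Haystack APIs. The function names and the sample rankings are assumptions made up for illustration.

```python
def hit_rate_at_k(results: dict[str, list[str]], expected: dict[str, str], k: int) -> float:
    """Fraction of questions whose expected chunk appears in the top k results."""
    if not expected:
        return 0.0
    hits = sum(1 for q, want in expected.items() if want in results.get(q, [])[:k])
    return hits / len(expected)


def mean_reciprocal_rank(results: dict[str, list[str]], expected: dict[str, str]) -> float:
    """Average of 1/rank of the expected chunk; a miss contributes 0."""
    if not expected:
        return 0.0
    total = 0.0
    for q, want in expected.items():
        ranked = results.get(q, [])
        if want in ranked:
            total += 1.0 / (ranked.index(want) + 1)
    return total / len(expected)


# Which chunk *should* answer each fan-out question.
expected = {
    "How do I taper?": "taper",
    "What gear do I need?": "gear",
    "How long should a training plan be?": "plan",
}
# Made-up ranked results, as a retrieval test might return them.
results = {
    "How do I taper?": ["taper", "plan", "gear"],        # expected chunk at rank 1
    "What gear do I need?": ["plan", "gear", "taper"],   # expected chunk at rank 2
    "How long should a training plan be?": ["gear", "taper"],  # expected chunk missing
}

print("hit rate@1:", hit_rate_at_k(results, expected, 1))
print("hit rate@2:", hit_rate_at_k(results, expected, 2))
print("MRR:", mean_reciprocal_rank(results, expected))
```

Re-running the same question set after each content change shows whether an edit actually moved your chunks up or just moved words around.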

4. Technical & Schema Health

No matter how strong your chunks are, they’re worthless if search engines and LLMs can’t crawl, parse, and understand them. Clean structure, accessible markup, and valid schema keep your pages visible and make chunk retrieval more reliable down the line.

Tools to help:

• Ryte: Detailed crawl reports, structural audits, and deep schema validation; excellent for finding markup or rendering gaps.

• Screaming Frog: Classic SEO crawler for checking headings, word counts, duplicate sections, and link structure: all cues that affect how chunks are parsed.

• Sitebulb: Comprehensive technical SEO crawler with robust structured data validation, clear crawl maps, and helpful visuals for spotting page-level structure problems.
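For a sense of what a structural check looks like under the hood, here is a small sketch that audits the heading outline of a page using only Python’s standard library. The two rules it applies (exactly one h1, no skipped heading levels) are common audit conventions, not requirements any AI system is known to enforce. `HeadingCollector`, `audit_headings`, and the sample HTML are hypothetical and far simpler than what the crawlers above do.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect (level, text) for every h1-h6 element in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None   # heading level currently open, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        if self._level is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.headings.append((self._level, "".join(self._buffer).strip()))
            self._level = None


def audit_headings(html: str) -> list[str]:
    """Return a list of human-readable problems with the heading outline."""
    parser = HeadingCollector()
    parser.feed(html)
    parser.close()
    problems = []
    h1_count = sum(1 for level, _ in parser.headings if level == 1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    previous = 0
    for level, text in parser.headings:
        if previous and level > previous + 1:
            problems.append(f"h{previous} jumps to h{level} at '{text}'")
        if not text:
            problems.append(f"empty h{level}")
        previous = level
    return problems


# Placeholder page: the h4 skips a level on purpose.
sample_html = "<h1>Marathon Training</h1><h2>Plan Length</h2><h4>Taper</h4><p>Body</p>"
problems = audit_headings(sample_html)
for problem in problems:
    print(problem)
```

A clean outline matters for chunking because many splitters use headings as boundaries; a skipped or empty heading can leave a passage attached to the wrong context.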

5. Authority & Trust Signals

Even if your chunk is technically solid, an LLM still needs a reason to trust it enough to cite or summarize it. That trust comes from clear authorship, brand reputation, and external signals that prove your content is credible and well-cited. These trust cues must be easy for both search engines and AI agents to verify.

Tools to back this up:

• Authory: Tracks your authorship, keeps a verified portfolio, and monitors where your articles appear.

• SparkToro: Helps you find where your audience spends time and who influences them, so you can grow relevant citations and mentions.

• Perplexity Pro: Lets you check whether your brand or site appears in AI answers, so you can spot gaps or new opportunities.
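If you save the AI answers you collect during spot checks, a few lines of code can turn them into a simple citation rate. The sketch below pulls the linked domains out of each saved answer and reports how often yours appears. It assumes you have copied the answer text (with its source URLs) by hand; it calls no AI service. `example.com`, the sample answers, and the function names are placeholders.

```python
import re
from urllib.parse import urlparse

# Matches http(s) URLs up to the next whitespace or closing bracket.
URL_PATTERN = re.compile(r"https?://[^\s)\]>]+")


def cited_domains(answer_text: str) -> set[str]:
    """Return the set of domains linked in one answer, without 'www.'."""
    domains = set()
    for url in URL_PATTERN.findall(answer_text):
        # Drop sentence punctuation that may trail the URL.
        host = urlparse(url.rstrip(".,;")).hostname or ""
        if host.startswith("www."):
            host = host[4:]
        if host:
            domains.add(host)
    return domains


def citation_rate(answers: dict[str, str], domain: str) -> float:
    """Fraction of saved answers that link to the given domain (exact match)."""
    if not answers:
        return 0.0
    cited = sum(1 for text in answers.values() if domain in cited_domains(text))
    return cited / len(answers)


# Placeholder answers keyed by the prompt that produced them.
answers = {
    "How do I taper?": "Cut mileage before race day (source: https://www.example.com/taper).",
    "What gear do I need?": "See https://other.example.org/gear for a checklist.",
}

print("cited for:", [q for q, text in answers.items()
                     if "example.com" in cited_domains(text)])
print("citation rate:", citation_rate(answers, "example.com"))
```

Tracking this number per fan-out branch shows which parts of your map are earning citations and which still need authority work.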

Query fan-out expands the plan. Retrieval testing proves it works.

Putting It All Together: A Smarter Workflow

When someone asks, “Does query fan-out really matter?” the answer is yes, but only as a first step. Use it to design a strong content plan and to spot angles you might miss. But always connect it to chunk creation, vector storage, live retrieval testing, and trust-building.

Here’s how that looks in order:

1. Expand: Use fan-out tools like AlsoAsked or AnswerThePublic.

2. Draft: Turn each branch into a clear, stand-alone chunk.

3. Check: Run crawls and fix schema issues.

4. Store: Push your chunks to a vector DB.

5. Test: Use prompt tests and RAG pipelines.

6. Monitor: See if you get cited or retrieved in real AI answers.

7. Refine: Adjust coverage or depth as gaps appear.

The Bottom Line

Query fan-out is a valuable input, but it’s never been the whole solution. It helps you figure out what to cover, but it does not prove what gets retrieved, read, or cited.

As GenAI-powered discovery keeps growing, smart marketers will build that bridge from idea to index to verified retrieval. They’ll map the road, pave it, watch the traffic, and adjust the route in real time.

So next time you hear fan-out pitched as a silver bullet, you don’t have to argue. Just remind people of the bigger picture: the real win is moving from possible coverage to provable presence.

If you do that work (with the right checks, tests, and tools), your fan-out map actually leads somewhere useful.
